Rise of the modular monolith

or simply - modulith

In today’s cloud-powered world there seem to be (very roughly) two predominant ways to architect your distributed software system - serverless and microservices (where the definition of what “micro” means is very loose and subjective to each organization).

Note that serverless is not a topic of this post as it is a whole different beast which we will not grapple with here.

While programming in the world of microservices I noticed a few factors that significantly affected the probability of success with this architecture. I will mention the two that I felt were the most impactful.

Organization’s microservice readiness/maturity

One important factor to take into account is your organization’s level of maturity with regard to microservices and its readiness to adopt this architecture. This can significantly influence things such as:

how fast you can ship a microservice from concept to production,

how simple the integration between your microservices will be,

how many dependencies your product teams might have on your DevOps teams,

how well production incidents are handled (MTTA, MTTR and other MTxxses)

etc.

In practice, it can easily happen that you will struggle with all of these and more, as not all organizations have battle-tested microservice templates, out-of-the-box CI/CD pipelines, one-click-to-spin-up testing environments, established contract definition/testing practices, out-of-the-box SLO observability with error budgeting and much more.

At this moment on the software engineering timeline, having all these things in place requires assigning people to build them and foster their adoption - which means investing money. In the future, things will likely evolve in a direction where cloud vendors take over more and offer more of these concerns out of the box than they do now. But right now microservices still require a dedicated infra/devops/core/platform/whatever team that handles all this and serves your product teams.

Naturally, as your organization implements more and more of these cross-cutting practices, standards and infrastructure, ease of development with this architecture should improve. However, as mentioned already, not all companies are there yet. In fact, the majority probably are not.

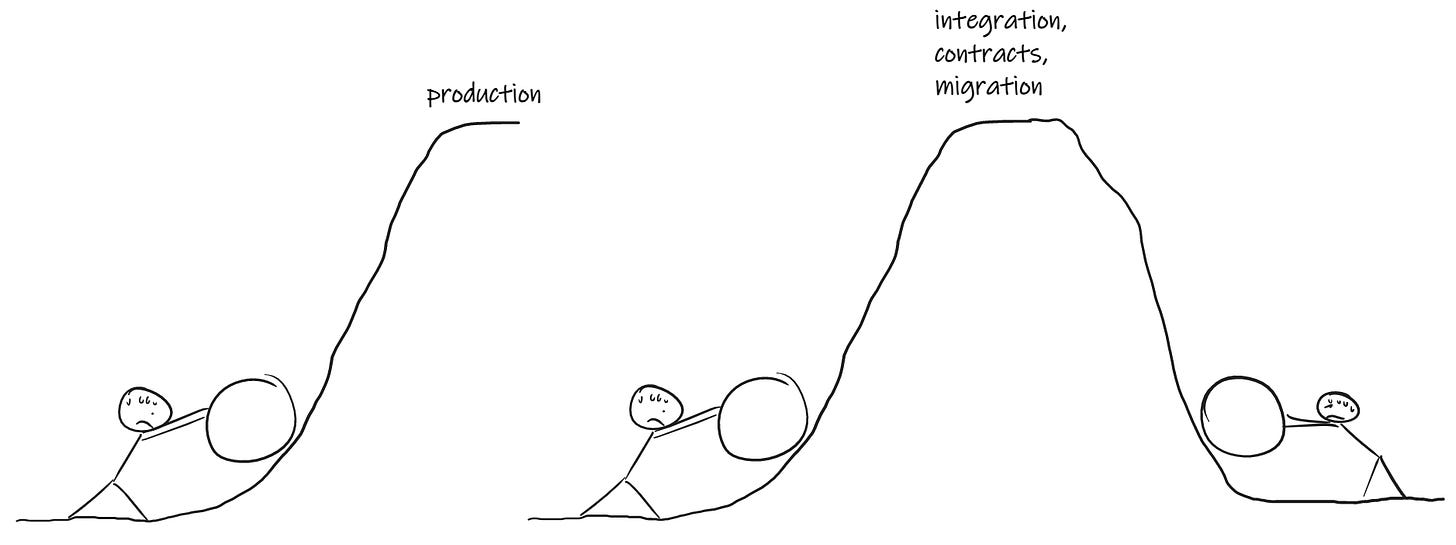

This means that, if you are a part of an organization not fully bootstrapped to work with microservices efficiently, any feature that might require you to:

spin up a new microservice,

implement a scenario which spans across multiple microservices,

change the contract between services in a backward incompatible way,

migrate a capability from one service to another,

will certainly have a significant overhead of boilerplate work that will slow you down and impair your time to market.

Now someone might say something along these lines: “Well, if you get the boundaries of your microservices and the contracts between them right from the start, you will not have any of these problems.” Which leads us to the second important factor…

Postponing things

Having an architecture that will work well only if you get everything right from the start is not a good architecture.

Several times I have seen organizations attempt a big design up front (BDUF) just to fail miserably months or years later, when they learn about new requirements (functional or non-functional) or new constraints they did not know about when they started BDUF-ing. The investment in the original architecture at that point is usually quite significant, and in most cases it is hard for the company to just give up and let go (due to the sunk cost fallacy). What usually happens then is that developers are forced to squeeze in use cases that just won’t fit into the rigid architecture that was BDUF-ed by an ivory tower architect think tank. This almost certainly leads to failure, frustration and money loss.

When designing a software system we are always trying to design for failure. We try to make our systems resilient and think of “what-if” scenarios where we make sure that our users get served even in the event of a zombie apocalypse or a meteorite slamming into our data center. We know that things will fail for sure and from the very beginning we design for those failures.

What if we take the same principle that we apply to our servers and infrastructure and apply it to ourselves (humble Homo sapiens programmers)? So before we start architecting and designing anything at all, let’s state the obvious:

We will for sure fail to determine correct microservice boundaries.

We will fail miserably at determining proper contracts between microservices.

Our DDD-ing will be a long marathon full of failures, mistakes and bloopers until we get something decent enough to call it a domain model that solves our business problem.

All this implies that we are much more fallible at the beginning of a software system’s lifecycle than at its later stages. Knowledge inevitably grows as time goes by - you simply always know more tomorrow than today. So why would you increase the cost of fixing the mistakes you know you will make, at the very moment you know the majority of those mistakes will happen?

If you have chosen microservices as the target architecture for your organization, and microservice maturity and readiness are not there yet, how do you tactically make sure that the cost of the above-mentioned errors (which you will for sure be making) is low?

Modular monolith (aka modulith)

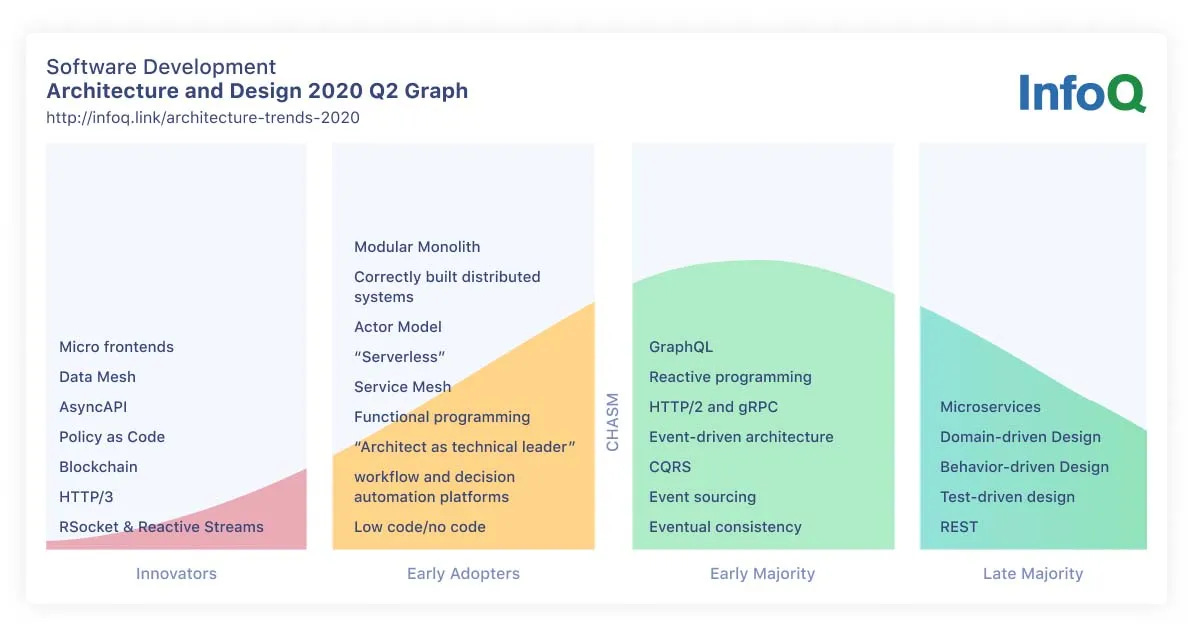

Let’s take a step back and look at the architecture trends of the last two years. The majority of companies are indeed adopting microservices for architecting their software systems. For example, while serverless architecture was used only by early adopters in 2020, microservices architecture had already reached the late majority by then.

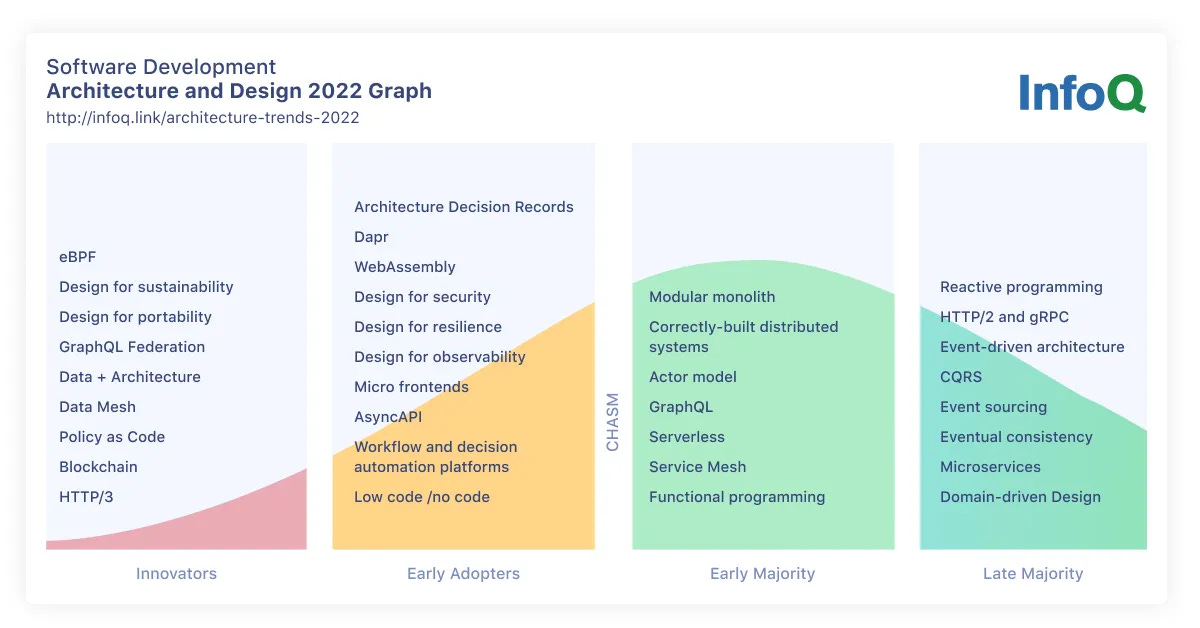

In 2022 the trends moved a bit: serverless is now being adopted by the early majority and is moving towards the late majority.

On an unrelated note, it is nice to see ADRs used by the early adopters. I am curious to see whether they will reach the majority in the software development landscape in the years to come.

One interesting thing to note is that together with the serverless architecture (but with significantly less hype) there is one more mover in the same quadrant. This is the modular monolith. Seeing the modular monolith’s adoption moving at the same pace as serverless architecture was at first a bit surprising to me, for two reasons:

The first one, as I mentioned already, is simply the lack of hype. When I did a quick Google search for “serverless architecture” at the time of writing I got about 12M results. When I did the same for the term “modular monolith” I got about 2M results. So the difference between the two is roughly a factor of six. The results for serverless architecture also reveal quite a few conferences dedicated solely to this topic. Needless to say, I found no conferences dedicated solely to the modulith 😊.

The second one is the lack of “corporate sponsorship”. All three major cloud vendors advertise serverless architecture as part of the promotion of their serverless offerings - AWS Lambda, Azure Functions and Google Cloud Functions. Of course, there are no corporations pushing for the modular monolith (at least not that I know of).

So how is the software development industry adopting the modular monolith at the same pace as serverless architecture then?

I cannot answer this question with certainty, so I will just make a guess. Certainly there is a percentage of companies that started with a modular monolith right from the very beginning and have only a single deployable of their software. Apart from that, my guess is that a large number of companies that actually started with microservices, instead of ending up with a lot of small-scoped services as the hype promised they would, ended up with several modular monoliths. This is just a guess though, so take it with a huge grain of salt.

Whatever it is, it seems that the adoption of the modular monolith is in some way a very organic process. As there is not much corporate incentive to push for it, nor does it have the “coolness” and hype factor that microservices and serverless architectures have, it all seems very spontaneous.

Now to come back to the cost of architecture mistakes that we talked about earlier. The majority of mistakes that you will be making when building and refining your domain model will be related either to your service boundaries or to the contracts between services. Again, we are not trying to avoid these mistakes (as they are practically inevitable). We are trying to reduce their cost. Ideally we want this cost to be very low so that you have the flexibility to keep failing and keep refactoring until you get it right.

This is where the modular monolith can tactically guide you and lead you all the way from a vague concept of your system (or a subsystem) to a full-blown microservices architecture. With the modular monolith you create a module for each component that you suspect might need to become an independent service in the future. By the definition of a monolith, you deploy all these components together in one go. Then, as time goes by, you incorporate new knowledge, use cases and requirements into those components and you keep refactoring your domain model. Sometimes you change a contract between components, sometimes you change the boundaries of the components themselves, but you do everything with low-effort atomic changes and a single deploy.
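To make this a bit more tangible, here is a minimal sketch of two modules living in one deployable. Everything in it is made up for illustration: the `orders` and `billing` modules, the `BillingApi` contract and the `build_app` composition root are hypothetical names, not something prescribed by any framework. The point is only that the contract between modules is explicit, while both sides of it still ship together.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


# --- billing module: public contract (the only part other modules may use) ---
@dataclass(frozen=True)
class Invoice:
    order_id: str
    amount_cents: int


class BillingApi(Protocol):
    def create_invoice(self, order_id: str, amount_cents: int) -> Invoice: ...


# --- billing module: internal implementation (hypothetical, in-memory) ---
class InMemoryBilling:
    def __init__(self) -> None:
        self._invoices: Dict[str, Invoice] = {}

    def create_invoice(self, order_id: str, amount_cents: int) -> Invoice:
        invoice = Invoice(order_id, amount_cents)
        self._invoices[order_id] = invoice
        return invoice


# --- orders module: depends on the billing contract, not on its internals ---
class OrderService:
    def __init__(self, billing: BillingApi) -> None:
        self._billing = billing

    def place_order(self, order_id: str, unit_price_cents: int, quantity: int) -> Invoice:
        total = unit_price_cents * quantity
        return self._billing.create_invoice(order_id, total)


# --- composition root: all modules are wired and deployed together ---
def build_app() -> OrderService:
    return OrderService(billing=InMemoryBilling())


if __name__ == "__main__":
    print(build_app().place_order("order-1", 250, 4))
```

If the contract turns out to be wrong (say `create_invoice` also needs a currency), the contract, its implementation and its caller all change in one commit and go out in one deploy. Across two separately deployed services the same change would typically need a versioned, backward-compatible rollout.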

Once there is a need to extract a component to a separate service, you dedicate some effort and do that. At that moment your modular monolith has given birth to a fully-fledged microservice.

Note that a “need” here does not strictly have to come from the business. It can of course come from independent scalability/performance requirements. But, more importantly, I want to emphasize that the need for extracting a microservice can also come from development. For example, if your modular monolith takes 1h to build, starts crashing your IDE and makes your life miserable, raise this as a priority, push for it and extract your component to an independent microservice. Developer experience is also a factor that should be taken into account when making these decisions.

Here I am referring to an organization that has already identified microservices as its target architecture based on its scaling requirements. In that case it is important that you treat your modulith as an incubator or a womb for your microservices - not as a good old-fashioned monolith. Not every monolith that simply has modules is a modular monolith. The boundaries between those modules, their integration and their independence should fully resemble a truly independent microservices architecture and not a monolithic application. This is because, apart from the cost of refactoring your domain model that I keep mentioning, you also want to reduce (or spread out) the cost of actually extracting an independent module into a dedicated microservice once the time comes. These are the two cost factors you are aiming to keep low in order for your teams to be agile, ship fast, respond to market changes quickly and still be able to meet your scalability requirements. So:

you want your teams to be able to refactor their domain models with low effort (as this refactoring happens often) and

you want your teams to be able to extract bounded contexts (or even finer grained components) to independent services once the time comes without that being a years-long epic saga that will suck the life out of everyone.
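One way to keep module boundaries honest inside a single codebase is to check them automatically. The sketch below is only an illustration under an assumed convention that I am inventing here: each top-level module exposes its contract in an `api` subpackage, and nothing else may be imported from outside. The function name `find_boundary_violations` and the module names in the demo are hypothetical.

```python
import ast
from typing import Dict, List


def find_boundary_violations(sources: Dict[str, str]) -> List[str]:
    """Report imports that reach past another module's public `api` package.

    `sources` maps a dotted module name (e.g. "orders.service") to its source
    text. Assumed convention: only `<module>.api` is importable from outside.
    """
    top_level = {name.split(".")[0] for name in sources}
    violations = []
    for name, text in sources.items():
        owner = name.split(".")[0]
        for node in ast.walk(ast.parse(text)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                # Include the imported name so `from billing import api` is allowed.
                targets = [f"{node.module}.{alias.name}" for alias in node.names]
            else:
                continue
            for target in targets:
                parts = target.split(".")
                foreign = parts[0] in top_level and parts[0] != owner
                is_public = len(parts) >= 2 and parts[1] == "api"
                if foreign and not is_public:
                    violations.append(f"{name} -> {target}")
    return sorted(violations)


if __name__ == "__main__":
    demo = {
        "billing.api": "class BillingApi: ...\n",
        "billing.internal.repo": "class InvoiceRepo: ...\n",
        "orders.service": (
            "from billing.api import BillingApi\n"
            "from billing.internal.repo import InvoiceRepo\n"
        ),
    }
    for violation in find_boundary_violations(demo):
        print(violation)
```

Run as part of the build, a check like this fails as soon as one module starts depending on another module's internals, which is exactly the kind of coupling that makes a later extraction expensive.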

My (very subjective) view is that the modular monolith should not be perceived as your target architecture. It should instead serve as an intermediary incubator giving birth to your microservices. This incubator helps you reduce the domain refactoring cost, and hexagonal architecture gives you a way to structure your modular monolith so that the cost of microservice extraction stays low.
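As a rough illustration of why ports and adapters keep the extraction cost low, consider the sketch below. All names (`BillingPort`, `OrdersCore`, the two adapters and the `send` transport callable) are hypothetical, and the "remote" side is simulated with a plain function that takes and returns JSON, so nothing here touches a real network.

```python
import json
from typing import Callable, Protocol


class BillingPort(Protocol):
    """Outbound port owned by the (hypothetical) orders core."""

    def charge(self, order_id: str, amount_cents: int) -> str: ...


class OrdersCore:
    """Domain logic: knows the port, never a concrete adapter."""

    def __init__(self, billing: BillingPort) -> None:
        self._billing = billing

    def checkout(self, order_id: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return self._billing.charge(order_id, amount_cents)


class BillingModule:
    """Stand-in for the billing module's own logic."""

    def charge(self, order_id: str, amount_cents: int) -> str:
        return f"receipt:{order_id}:{amount_cents}"


class InProcessBillingAdapter:
    """Adapter used while billing still lives inside the monolith."""

    def __init__(self, module: BillingModule) -> None:
        self._module = module

    def charge(self, order_id: str, amount_cents: int) -> str:
        return self._module.charge(order_id, amount_cents)


class RemoteBillingAdapter:
    """Adapter used after extraction; `send` hides the wire (HTTP, queue, ...)."""

    def __init__(self, send: Callable[[str], str]) -> None:
        self._send = send

    def charge(self, order_id: str, amount_cents: int) -> str:
        request = json.dumps({"order_id": order_id, "amount_cents": amount_cents})
        response = json.loads(self._send(request))
        return response["receipt"]


def fake_billing_service(request_body: str) -> str:
    """Simulates the extracted service handling one JSON request."""
    payload = json.loads(request_body)
    receipt = BillingModule().charge(payload["order_id"], payload["amount_cents"])
    return json.dumps({"receipt": receipt})


if __name__ == "__main__":
    inside = OrdersCore(InProcessBillingAdapter(BillingModule()))
    extracted = OrdersCore(RemoteBillingAdapter(fake_billing_service))
    print(inside.checkout("o-1", 500) == extracted.checkout("o-1", 500))
```

`OrdersCore` is identical in both setups; only the adapter passed in at wiring time differs. In a real extraction the remote adapter would also have to deal with timeouts, retries and partial failures, which this sketch deliberately leaves out.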

In this article I wanted to cover the latter. However, I seem to have a tendency to babble a lot, so my intro to the topic of a “modular hexagon” turned into a full-blown article about the rise of the modular monolith.